ICCV 2009, IEEE

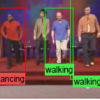

Learning Actions From the Web

This paper proposes a generic method for action recognition in uncontrolled videos. The idea is to use images collected from the Web to learn representations of actions and use this knowledge to automatically annotate actions in videos. Our approach is unsupervised in the sense that it requires no human intervention other than the text querying. Its benefits are two-fold: 1) we can improve retrieval of action images, and 2) we can collect a large generic database of action poses, which can then be used in tagging videos. We present experimental evidence that annotating actions is possible using action images collected from the Web.
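
The overall pipeline described in the abstract (learn from labeled Web images, then tag a video) can be illustrated with a toy sketch. This is not the authors' method: the nearest-centroid classifier, the per-frame majority vote, the two-dimensional feature vectors, and all function names below are assumptions chosen only to keep the example small and runnable.

```python
from collections import Counter
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def train(labeled_features):
    """Build one centroid per action label.

    labeled_features maps an action label (the text query) to the
    feature vectors of the images retrieved for that query.
    """
    return {label: centroid(vecs) for label, vecs in labeled_features.items()}


def classify(model, feature):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], feature))


def annotate_video(model, frame_features):
    """Tag a video with the most frequent per-frame label (majority vote)."""
    votes = Counter(classify(model, f) for f in frame_features)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D pose features for images returned by two text queries.
web_images = {
    "running": [[0.9, 0.1], [0.8, 0.2]],
    "sitting": [[0.1, 0.9], [0.2, 0.8]],
}
model = train(web_images)

# Hypothetical per-frame features extracted from one video.
video_frames = [[0.85, 0.15], [0.7, 0.3], [0.3, 0.7]]
label = annotate_video(model, video_frames)
print(label)
```

In this sketch the only human input is the set of query strings used as labels, which mirrors the sense in which the abstract calls the approach unsupervised; a real system would replace the toy vectors with pose descriptors computed from images and video frames.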

| Field | Value |
| --- | --- |
| Added | 13 Jul 2009 |
| Updated | 10 Jan 2010 |
| Type | Conference |
| Year | 2009 |
| Where | ICCV |
| Authors | Nazli Ikizler-Cinbis, R. Gokberk Cinbis, Stan Sclaroff |
