ICCV

2003

IEEE

Unsupervised Improvement of Visual Detectors using Co-Training

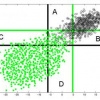

One significant challenge in the construction of visual detection systems is the acquisition of sufficient labeled data. This paper describes a new technique for training visual detectors which requires only a small quantity of labeled data, and then uses unlabeled data to improve performance over time. Unsupervised improvement is based on the co-training framework of Blum and Mitchell, in which two disparate classifiers are trained simultaneously. Unlabeled examples which are confidently labeled by one classifier are added, with labels, to the training set of the other classifier. Experiments are presented on the realistic task of automobile detection in roadway surveillance video. In this application, co-training reduces the false positive rate by a factor of 2 to 11 from the classifier trained with labeled data alone.
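The co-training loop described in the abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' detector: the two "views" are plain floats, the `NearestMeanClassifier` is a toy stand-in for a real visual detector, and the names `co_train`, `fit_on_view`, `make_toy_data`, the `threshold` value, and the synthetic Gaussian data are all assumptions made for the example.

```python
import random
from statistics import mean


class NearestMeanClassifier:
    """Toy 1-D stand-in for a detector: picks the class with the closer mean."""

    def fit(self, xs, ys):
        self.mu_pos = mean(x for x, y in zip(xs, ys) if y == 1)
        self.mu_neg = mean(x for x, y in zip(xs, ys) if y == 0)
        return self

    def predict(self, x):
        """Return (label, confidence); confidence is the distance margin."""
        d_pos, d_neg = abs(x - self.mu_pos), abs(x - self.mu_neg)
        label = 1 if d_pos < d_neg else 0
        return label, abs(d_pos - d_neg)


def fit_on_view(train, view):
    """Train a classifier on one view (index 0 or 1) of a labeled set."""
    xs = [views[view] for views, _ in train]
    ys = [label for _, label in train]
    return NearestMeanClassifier().fit(xs, ys)


def co_train(labeled, unlabeled, rounds=5, threshold=1.5):
    """labeled: [((view_a, view_b), label)]; unlabeled: [(view_a, view_b)]."""
    train_a, train_b = list(labeled), list(labeled)
    pool = list(unlabeled)
    for _ in range(rounds):
        clf_a, clf_b = fit_on_view(train_a, 0), fit_on_view(train_b, 1)
        remaining = []
        for views in pool:
            label_a, conf_a = clf_a.predict(views[0])
            label_b, conf_b = clf_b.predict(views[1])
            # A confident label from one classifier goes to the OTHER one's set.
            if conf_a >= threshold:
                train_b.append((views, label_a))
            if conf_b >= threshold:
                train_a.append((views, label_b))
            if conf_a < threshold and conf_b < threshold:
                remaining.append(views)
        if len(remaining) == len(pool):  # nothing confidently labeled; stop
            break
        pool = remaining
    return fit_on_view(train_a, 0), fit_on_view(train_b, 1), pool


def make_toy_data(n_per_class=100, seed=0):
    """Synthetic unlabeled pool: two independent noisy views per example."""
    rng = random.Random(seed)
    pos = [(rng.gauss(2, 1), rng.gauss(2, 1)) for _ in range(n_per_class)]
    neg = [(rng.gauss(-2, 1), rng.gauss(-2, 1)) for _ in range(n_per_class)]
    return pos + neg


if __name__ == "__main__":
    seed_labels = [((2.0, 2.0), 1), ((-2.0, -2.0), 0)]  # tiny labeled set
    clf_a, clf_b, leftover = co_train(seed_labels, make_toy_data())
    print("unlabeled examples left in pool:", len(leftover))
```

The key design point is the cross-assignment: each classifier only ever receives pseudo-labels produced by the other, so an example that is ambiguous in one view can still be labeled when the other view is confident about it.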

Co-training Framework | Computer Vision | ICCV 2003 | Sufficient Labeled Data | Unlabeled Data | Unlabeled Examples | Visual Detection Systems |

| Field | Value |
| --- | --- |
| Added | 15 Oct 2009 |
| Updated | 31 Oct 2009 |
| Type | Conference |
| Year | 2003 |
| Where | ICCV |
| Authors | Anat Levin, Paul A. Viola, Yoav Freund |
