ICPR

2004

IEEE

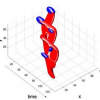

Velocity Adaptation of Space-Time Interest Points

The notion of local features in space-time has recently been proposed to capture and describe local events in video. When computing space-time descriptors, however, the result may depend strongly on the relative motion between the object and the camera. To compensate for this variation, we present a method that automatically adapts the features to the local velocity of the image pattern and hence yields a video representation that is stable with respect to different amounts of camera motion. Experimentally, we show that velocity adaptation substantially increases the repeatability of interest points as well as the stability of their associated descriptors. Moreover, in an application to human action recognition, we demonstrate how velocity-adapted features enable recognition of human actions in situations with unknown camera motion and complex, nonstationary backgrounds.
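The abstract does not spell out the adaptation procedure, so the following is only a rough illustration of the general idea, not the authors' method. It assumes a 1-D spatial signal over time, a single constant velocity, a least-squares velocity estimate from space-time gradients, and a Galilean warp x' = x + v t that makes the moving pattern stationary. All function names and parameters here are hypothetical.

```python
import numpy as np

def estimate_velocity(video):
    """Least-squares velocity (pixels/frame) for a (T, X) array.

    Assumes brightness constancy: fx * v + ft = 0 (an illustrative model).
    """
    ft, fx = np.gradient(video)  # derivatives along t (axis 0) and x (axis 1)
    return -np.sum(fx * ft) / np.sum(fx * fx)

def galilean_warp(video, v):
    """Resample each frame so a pattern moving at velocity v appears stationary."""
    T, X = video.shape
    x = np.arange(X, dtype=float)
    out = np.empty((T, X), dtype=float)
    for t in range(T):
        # warped(x, t) = video(x + v * t, t), using linear interpolation
        out[t] = np.interp(x + v * t, x, video[t])
    return out

def make_moving_blob(T=20, X=200, v=1.5, x0=60.0, sigma=6.0):
    """Synthetic test data: a Gaussian blob translating at v pixels/frame."""
    x = np.arange(X, dtype=float)
    return np.stack(
        [np.exp(-(x - x0 - v * t) ** 2 / (2 * sigma ** 2)) for t in range(T)]
    )

video = make_moving_blob()
v_hat = estimate_velocity(video)
stabilized = galilean_warp(video, v_hat)

# Mean absolute frame-to-frame change, before and after the warp
temporal_change_before = np.abs(np.diff(video, axis=0)).mean()
temporal_change_after = np.abs(np.diff(stabilized, axis=0)).mean()
print(v_hat, temporal_change_before, temporal_change_after)
```

On this synthetic input the estimated velocity should land close to the true 1.5 pixels/frame, and the warped sequence should change far less from frame to frame than the original. That is the sense in which a descriptor computed after adaptation would be less sensitive to relative camera motion.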

Computer Vision | Features Enable Recognition | ICPR 2004 | Space-time Descriptors | Unknown Camera Motion

| Field | Value |
| --- | --- |
| Added | 09 Nov 2009 |
| Updated | 09 Nov 2009 |
| Type | Conference |
| Year | 2004 |
| Where | ICPR |
| Authors | Ivan Laptev, Tony Lindeberg |
